Setup

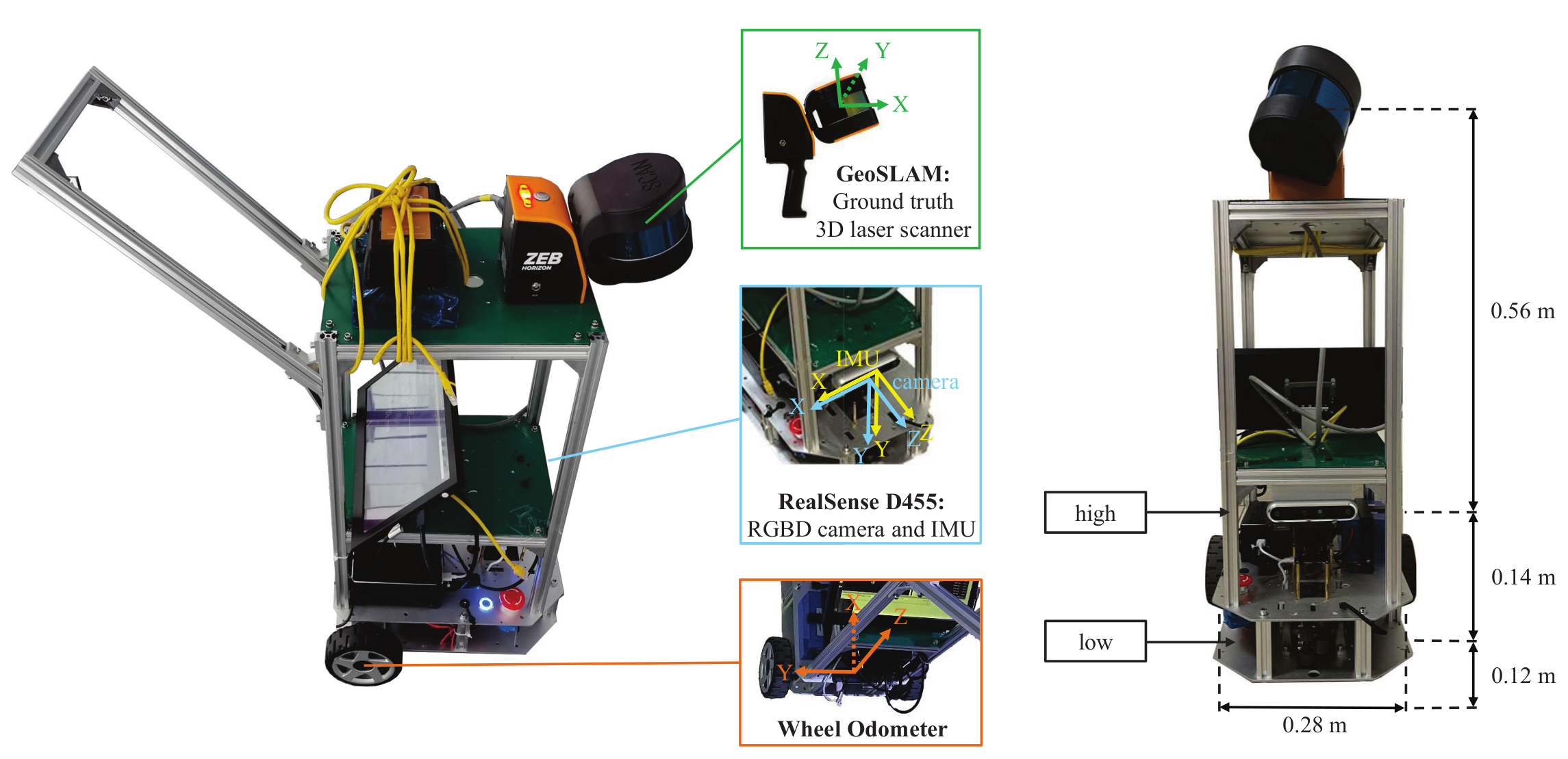

We design a ground wheeled robot equipped with an RGBD-IW sensor suite (an RGBD camera, an IMU, and a wheel odometer) for data acquisition. To obtain ground truth trajectories and 3D point clouds, a high-precision 3D laser scanner called GeoSLAM is fixed on top of the robot. In addition, to enrich the dataset, images are captured from two different viewpoints (high and low). The figure above illustrates the robot setup, the coordinate systems of all sensors, and the two viewpoints of the RGBD camera.

RGBD camera

The Intel RealSense Depth Camera D455, a global shutter RGBD camera, captures aligned RGB images and depth maps at a resolution of 640 × 480 pixels and 30 fps. The ideal depth range is 0.6 to 6 meters from the image plane, and the depth error is less than 2% within 4 meters.
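Because the depth maps are aligned to the RGB images, each pixel can be lifted to a 3D point in the camera frame. The following is a minimal sketch assuming an undistorted pinhole model. The intrinsics `FX`, `FY`, `CX`, `CY` are hypothetical placeholders (the calibrated values are the ones reported in calibration.yaml), and the helper names are illustrative only.

```python
# Hypothetical pinhole intrinsics for a 640 x 480 image (placeholders, not calibrated values).
FX, FY = 380.0, 380.0
CX, CY = 320.0, 240.0

NEAR_M, FAR_M = 0.6, 6.0      # ideal depth range stated above
ERROR_RATIO = 0.02            # depth error below 2% ...
ERROR_VALID_UP_TO_M = 4.0     # ... within 4 meters


def backproject(u, v, depth_m, fx=FX, fy=FY, cx=CX, cy=CY):
    """Lift pixel (u, v) with depth measured along the optical axis to camera-frame XYZ."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def in_ideal_range(depth_m):
    """True if the depth lies inside the ideal 0.6 m to 6 m range."""
    return NEAR_M <= depth_m <= FAR_M


def depth_error_bound(depth_m):
    """Upper bound on depth error, or None beyond 4 m where no bound is stated."""
    if depth_m <= ERROR_VALID_UP_TO_M:
        return ERROR_RATIO * depth_m
    return None


point = backproject(400, 300, 2.0)
print(point, in_ideal_range(2.0), depth_error_bound(2.0))
```

Pixels whose depth falls outside the ideal range can simply be skipped before back-projection.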

IMU

The RealSense D455 integrates an IMU (Bosch BMI055) that provides 3-axis accelerometer and 3-axis gyroscope measurements at 200 Hz. The resolutions of the accelerometer and the gyroscope are 0.98 mg and 0.004 °/s, and their noise densities are 150 μg/√Hz and 0.014 °/s/√Hz, respectively.
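Estimators usually need the per-sample noise standard deviation rather than the continuous-time density. A common approximation, assumed here, treats the noise as white and multiplies the density by the square root of the sampling rate. The sketch below applies this to the figures above after converting to SI units; the function and constant names are illustrative.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2 per g
IMU_RATE_HZ = 200.0

# Noise densities from the text, converted to SI units.
ACCEL_DENSITY = 150e-6 * STANDARD_GRAVITY  # 150 ug/sqrt(Hz) -> m/s^2/sqrt(Hz)
GYRO_DENSITY = math.radians(0.014)         # 0.014 deg/s/sqrt(Hz) -> rad/s/sqrt(Hz)


def discrete_sigma(noise_density, rate_hz):
    """Per-sample standard deviation under a white-noise assumption."""
    return noise_density * math.sqrt(rate_hz)


accel_sigma = discrete_sigma(ACCEL_DENSITY, IMU_RATE_HZ)  # m/s^2
gyro_sigma = discrete_sigma(GYRO_DENSITY, IMU_RATE_HZ)    # rad/s
print(f"accel sigma: {accel_sigma:.4f} m/s^2, gyro sigma: {gyro_sigma:.5f} rad/s")
```

In practice these datasheet-derived values are often inflated by a safety factor, since real sensors also exhibit bias drift that this white-noise model ignores.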

Wheel Odometer

The WHEELTEC R550 (DIFF) PLUS carries our data acquisition equipment. Two differential wheels are mounted on a common axis (baseline), each with a high-precision GMR encoder that provides local angular rate readings, from which we solve for the linear and angular velocities at the center of the baseline.
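The mapping from wheel angular rates to the velocity at the center of the baseline follows the standard differential-drive model. The sketch below assumes ideal rolling without slip; the wheel radius and baseline length are hypothetical example values, not the calibrated parameters of the robot.

```python
def body_velocity(omega_left, omega_right, wheel_radius, baseline):
    """Differential-drive kinematics.

    omega_left, omega_right: wheel angular rates in rad/s
    wheel_radius, baseline:  meters
    Returns (v, w): forward velocity in m/s and yaw rate in rad/s
    at the center of the baseline (positive w is counter-clockwise).
    """
    v_left = wheel_radius * omega_left
    v_right = wheel_radius * omega_right
    v = 0.5 * (v_left + v_right)
    w = (v_right - v_left) / baseline
    return v, w


# Hypothetical geometry for illustration only.
WHEEL_RADIUS_M = 0.05
BASELINE_M = 0.30

print(body_velocity(10.0, 10.0, WHEEL_RADIUS_M, BASELINE_M))   # straight-line motion
print(body_velocity(-10.0, 10.0, WHEEL_RADIUS_M, BASELINE_M))  # turning in place
```

Equal wheel rates give pure translation, and opposite rates give pure rotation about the baseline center.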

3D Laser Scanner

We use the GeoSLAM ZEB Horizon, a handheld 3D LiDAR scanner with powerful offline SLAM technology, to generate precise ground truth trajectories and dense point cloud models for large-scale indoor environments. Its 16-line laser collects 300,000 points per second. With an effective range of 100 meters, GeoSLAM provides dense 3D scanning with a relative accuracy of up to 6 millimeters, depending on the environment. GeoSLAM provides trajectories at 100 Hz, which are in the same coordinate system as the corresponding 3D point cloud model.
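Since the ground truth trajectory is sampled at 100 Hz while the camera runs at 30 fps, an evaluation typically needs the ground truth pose at each image timestamp. The following is a minimal sketch that linearly interpolates the position only; it assumes both streams share a synchronized clock, and the sample data and function name are made up for illustration. Orientation would need spherical interpolation, which is omitted here.

```python
from bisect import bisect_right


def interpolate_position(times, positions, t):
    """Linearly interpolate an (x, y, z) position at time t.

    times:     sorted, strictly increasing timestamps in seconds
    positions: list of (x, y, z) tuples, one per timestamp
    """
    if not times[0] <= t <= times[-1]:
        raise ValueError("timestamp outside the trajectory")
    i = bisect_right(times, t)
    if i == len(times):  # t equals the last timestamp
        return tuple(positions[-1])
    t0, t1 = times[i - 1], times[i]
    alpha = (t - t0) / (t1 - t0)
    return tuple(p0 + alpha * (p1 - p0)
                 for p0, p1 in zip(positions[i - 1], positions[i]))


# Made-up 100 Hz trajectory samples for illustration.
gt_times = [0.00, 0.01, 0.02]
gt_positions = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.02, 0.01, 0.0)]
print(interpolate_position(gt_times, gt_positions, 0.015))
```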

Calibration

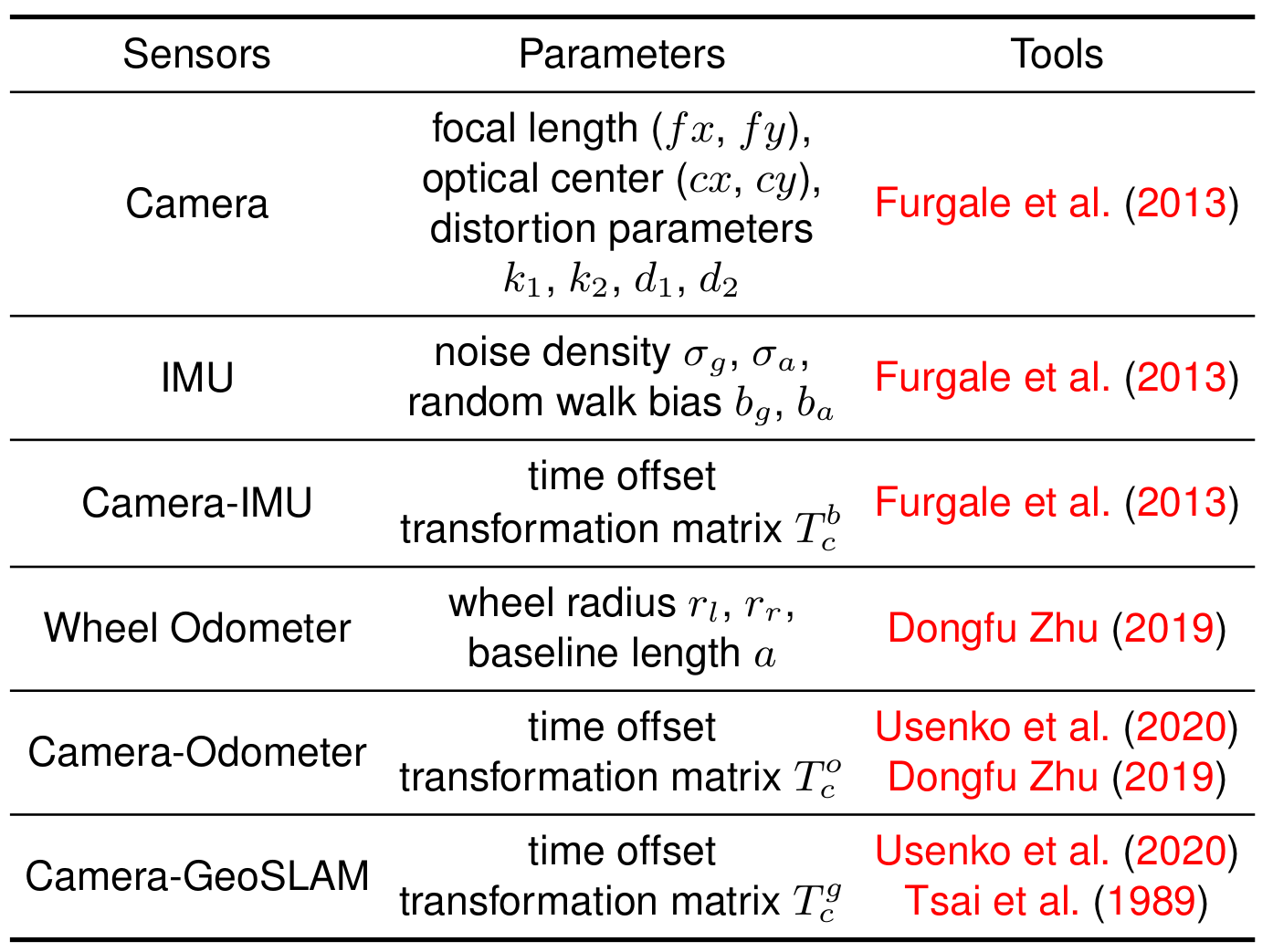

Our benchmark provides accurately calibrated intrinsic and extrinsic parameters and time synchronization for the different sensors and the 3D laser scanner, as shown in the table below. All parameters are reported in calibration.yaml.
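An extrinsic calibration between two sensors is typically used as a rigid transform that maps points from one frame to the other. The sketch below assumes the transform is available as a 4 × 4 homogeneous matrix; the matrix `T_A_B` is a made-up example, not a value from calibration.yaml, and the layout of that file is not assumed here.

```python
def transform_point(T, p):
    """Apply a 4x4 homogeneous rigid transform T to a 3D point p."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3]
                 for r in range(3))


def invert_rigid(T):
    """Invert a rigid transform: rotation becomes R^T, translation becomes -R^T t."""
    Rt = [[T[c][r] for c in range(3)] for r in range(3)]  # transpose of R
    t = [T[r][3] for r in range(3)]
    t_inv = [-sum(Rt[r][c] * t[c] for c in range(3)) for r in range(3)]
    return [Rt[r] + [t_inv[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


# Made-up extrinsic: 90 degree rotation about z plus a translation (frame B -> frame A).
T_A_B = [
    [0.0, -1.0, 0.0, 0.1],
    [1.0,  0.0, 0.0, 0.0],
    [0.0,  0.0, 1.0, 0.2],
    [0.0,  0.0, 0.0, 1.0],
]

p_B = (1.0, 0.0, 0.0)
p_A = transform_point(T_A_B, p_B)
p_B_again = transform_point(invert_rigid(T_A_B), p_A)
print(p_A, p_B_again)
```

Chaining two such transforms (for example camera to IMU, then IMU to odometer) amounts to multiplying their matrices in the corresponding order.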

Acknowledgement


[1] Furgale P, Rehder J and Siegwart R (2013) Unified temporal and spatial calibration for multi-sensor systems. In: 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems. pp. 1280–1286. DOI:10.1109/IROS.2013.6696514.

[2] Dongfu Zhu ZC (2019) Calibrate the internel parameters and extrinsec parameters between camera and odometer. https://github.com/MegviiRobot/CamOdomCalibraTool

[3] Usenko V, Demmel N, Schubert D, Stueckler J and Cremers D (2020) Visual-inertial mapping with non-linear factor recovery. IEEE Robotics and Automation Letters (RA-L) & Int. Conference on Intelligent Robotics and Automation (ICRA) 5(2): 422–429. DOI:10.1109/LRA.2019.2961227.

[4] Tsai RY, Lenz RK et al. (1989) A new technique for fully autonomous and efficient 3D robotics hand/eye calibration. IEEE Transactions on Robotics and Automation 5(3): 345–358.